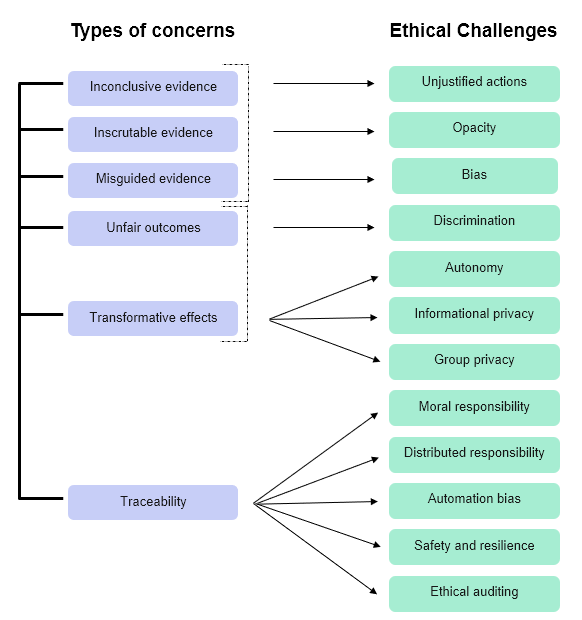

Artificial Intelligence (AI) has become an integral part of modern technology, transforming industries and influencing decision-making across various fields. However, along with its benefits, AI also brings several ethical challenges that need careful consideration. Understanding these challenges is crucial to ensure AI systems operate fairly, responsibly, and transparently. Here, we explore some of the most common ethical concerns related to AI and how they impact individuals, organizations, and society as a whole.

1. Bias and Fairness

One of the most pressing ethical concerns in AI is bias. AI systems learn from data, and if that data contains biases, the AI can reinforce and even amplify those biases. This can lead to unfair treatment of certain groups, especially in areas like hiring, lending, and law enforcement. For example, an AI-powered hiring tool trained on historical employment data might favor male candidates over female candidates if past hiring patterns were biased. Ensuring fairness in AI requires careful selection of training data and continuous monitoring for unintended biases.
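To make "continuous monitoring" a little more concrete, here is a minimal sketch of one possible check: comparing how often each group receives a positive outcome. The function names, the group labels, and the sample data are all made up for illustration. The ratio it computes is one simple fairness signal among many, and a low value is a prompt to investigate rather than proof of discrimination.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the candidate was shortlisted.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical hiring decisions, invented purely for this example.
decisions = (
    [("male", True)] * 6 + [("male", False)] * 4
    + [("female", True)] * 3 + [("female", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A commonly cited rule of thumb (the "four-fifths rule" from US employment guidance) treats a ratio below 0.8 as a signal worth reviewing. Running a check like this on each new batch of decisions is one way to catch drift early.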

2. Transparency and Explainability

AI decision-making is often complex and difficult to understand, leading to concerns about transparency. Many AI models, especially deep learning models, operate like “black boxes,” making it hard to explain how they reach their conclusions. This lack of explainability is a problem in high-stakes scenarios such as medical diagnoses or criminal justice, where the people affected need to understand the reasoning behind a decision in order to question or contest it. Improving AI transparency involves developing models that provide clear explanations for their outputs and making AI systems more interpretable.
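As a rough illustration of what an interpretable model can look like, the sketch below uses a simple linear score, where each input's contribution to the result can be read off directly. The weights, feature names, and applicant values are hypothetical, and this approach does not carry over to deep learning models, which usually need dedicated explanation techniques.

```python
def explain_linear_score(weights, bias, features):
    """Score an input with a linear model and rank each feature's contribution.

    Returns (score, ranked), where `ranked` lists (feature, contribution)
    pairs ordered from most to least influential by absolute value.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical loan-scoring weights and applicant, for illustration only.
weights = {"income": 0.5, "debt": -0.8, "years_employed": 0.2}
bias = 0.1
applicant = {"income": 2.0, "debt": 1.5, "years_employed": 3.0}

score, ranked = explain_linear_score(weights, bias, applicant)
for name, contribution in ranked:
    direction = "raised" if contribution > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(contribution):.2f}")
```

The trade-off is that a model this simple may be less accurate than a black-box alternative, so the right balance depends on how much is at stake in the decision.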

3. Accountability and Responsibility

As AI systems become more autonomous, determining accountability when things go wrong becomes a challenge. If an AI-driven system makes a harmful decision, who is responsible: the developer, the company using the AI, or the AI itself? The question is especially critical in self-driving cars, automated medical treatments, and AI-driven financial trading. To address this, clear legal and ethical frameworks are needed to assign responsibility and liability for AI-related actions.

4. Privacy and Data Protection

AI relies heavily on data to function effectively. However, collecting, storing, and analyzing large amounts of personal data raises serious privacy concerns. Many AI systems, such as facial recognition technology and personalized advertising algorithms, process sensitive information that could be misused or exposed to cyber threats. To protect privacy, organizations must implement strong data protection measures, comply with the data protection regulations that apply to them, and give users more control over their personal data.
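One example of a data protection measure is to keep only the fields a system actually needs and to replace direct identifiers with keyed hashes before the data is used. The sketch below is a minimal illustration using Python's standard `hmac` and `hashlib` modules. The record layout, field names, and key are invented for the example, and in practice the key would have to be stored securely and separately from the data.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record, keep_fields, id_field, secret_key):
    """Keep only the listed fields and pseudonymize the identifier field."""
    reduced = {field: record[field] for field in keep_fields if field in record}
    reduced[id_field] = pseudonymize(str(record[id_field]), secret_key)
    return reduced


# Hypothetical user record and key, for illustration only.
key = b"example-key-do-not-use-in-production"
raw = {
    "user_id": "user-1842",
    "email": "person@example.com",
    "age_band": "30-39",
    "clicks": 17,
}

safe = minimize_record(raw, keep_fields=["age_band", "clicks"], id_field="user_id", secret_key=key)
print(safe)
```

Pseudonymized data can still be linked back to a person by anyone who holds the key, so this reduces exposure rather than making the data anonymous.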

5. AI and Employment

Automation through AI is changing the job market, creating new opportunities but also displacing many traditional roles. While AI can improve efficiency and productivity, it can also lead to job losses in industries where automation replaces human workers. Ethical concerns arise regarding how businesses and governments should manage this transition to minimize negative impacts on workers. Upskilling and reskilling programs, along with policies that support displaced workers, are essential to ensure a balanced and fair workforce transition.

6. Security and Misuse of AI

AI technologies can be misused for harmful purposes, including deepfake creation, automated hacking, and misinformation campaigns. The ability to generate realistic fake videos, manipulate online content, and conduct cyberattacks using AI poses significant security risks. Governments, businesses, and technology experts must work together to create safeguards against AI misuse. This includes developing detection tools, enforcing strict regulations, and promoting ethical AI development practices.

7. Traceability and Trust

Another overarching concern in AI ethics is traceability: the ability to track and understand AI decision-making processes. Without traceability, it becomes difficult to assess whether AI systems are operating ethically and lawfully. AI models should be designed with built-in tracking mechanisms that record how decisions are made, which supports accountability and increases public trust in AI technologies. This is especially important in critical applications such as healthcare, law enforcement, and financial services.
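A tracking mechanism can be as simple as a log that records each decision alongside the model version and inputs that produced it. The sketch below is one possible design, not a standard: every entry stores a hash of the previous entry, so later edits to the log become detectable. The class name, field names, and sample decisions are assumptions made for the example.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """Hash an entry's contents in a stable (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class DecisionLog:
    """In-memory decision log where each entry is chained to the previous one."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output):
        """Append one decision, linking it to the hash of the previous entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        entry["hash"] = _digest(entry)  # computed before the "hash" key is added
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered, removed, or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical decisions, for illustration only.
log = DecisionLog()
log.record("credit-model-1.3", {"income": 2.0, "debt": 1.5}, "declined")
log.record("credit-model-1.3", {"income": 4.0, "debt": 0.5}, "approved")
print(log.verify())
```

A real deployment would also need durable storage and access controls, and logging inputs raises its own privacy questions, so what gets recorded should be chosen with care.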

Conclusion

AI has the potential to greatly improve human life, but it also comes with significant ethical challenges. Addressing these challenges requires collaboration between researchers, developers, policymakers, and society. By prioritizing fairness, transparency, accountability, privacy, security, and trust, we can create AI systems that benefit everyone while minimizing harm. As AI continues to evolve, ongoing discussion of these issues will be essential to keep its development responsible.